This blog post has not been written by an AI. This blog post has been written by a human intelligence pursuing a PhD in Artificial Intelligence. Although the first sentence seems trivial, it might not be so in the near future. If we can no longer distinguish a machine from a human during a phone conversation, as Google Duplex has promised, we should start to be suspicious about textual content on the web. Bots are already held responsible for 24% of all tweets on Twitter. Who is responsible for all this spam?

But really, this blog post has not been written by an AI, trust me. If it were, it would be much smarter, more eloquent and more intelligent, because eventually AI systems will make better decisions than humans. The whole argument about responsible AI is really an argument about how we define “better” in the previous sentence. But first, let’s point out that the ongoing discussion about responsible AI often conflates at least two levels of understanding algorithms:

- Artificial Intelligence in the sense of machine learning applications

- General Artificial Intelligence in the sense of an above-human-intelligence system

This blog post does not aim to blur the line between humans and machines, nor does it aim to provide answers to the ethical questions that arise from artificial intelligence. It simply tries to separate the conflated layers of AI responsibility and to present a few contemporary approaches at each of those layers.

Artificial Intelligence in the sense of machine learning applications

In recent years, we have definitely reached the first level of AI, which already presents us with ethical dilemmas in a variety of applications: autonomous cars, automated manufacturing and chatbots. Who is responsible for an accident caused by a self-driving car? Can a car decide in the face of a moral dilemma that even humans struggle to agree on? How can technical advances be combined with education programs (human resource development) to help workers practice new, sophisticated skills so that they do not lose their jobs? Do we need to declare online identities (is it a person or a bot)? How do we control for the manipulation of emotions through social bots?

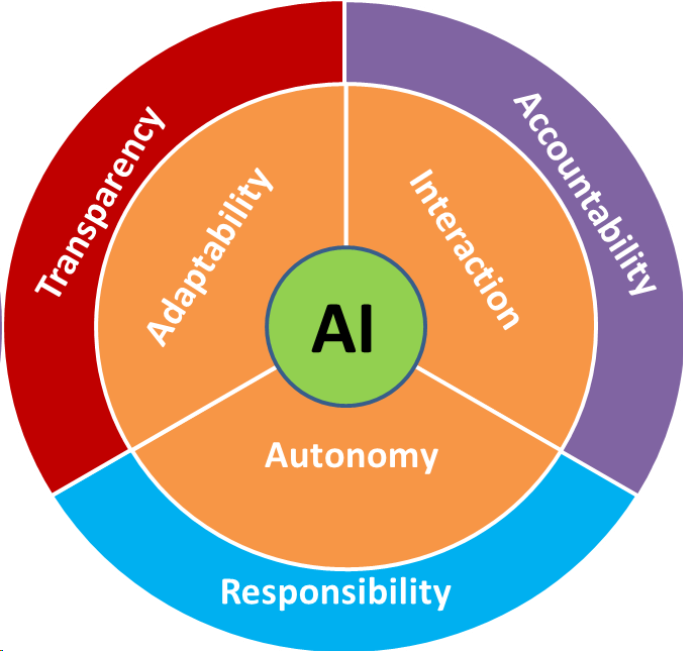

These are all questions that we are already facing. The artificial intelligence that gives rise to these questions is a controllable system. This means that its human creator (the programmer, company or government) can decide how the algorithm should be designed, so that the resulting behaviour abides by whatever rules follow from the answers to these questions. The responsibility is therefore with the human. In the same way that hammers are sold even though they can be used as a tool or abused as a weapon, we are not responsible for malicious abuse of AI systems. Whether for good or bad, these AI systems show adaptability, interaction and autonomy, each of which can be bounded by its own constraints.

Autonomy has to act within the bounds of responsibility, which includes the chain of responsible actors. If we give full autonomy to a system, we cannot take responsibility for its actions. But as we do not have fully autonomous systems yet, the responsibility is with the programmers, followed by some form of supervision, which normally follows company standards. Within this well-established chain of responsibility, which is in place in most industrial companies, we need to locate the responsibilities for AI systems with respect to their degree of autonomy. The other two properties, adaptability and interaction, contribute directly to the responsibility we can have over a system. If we allow full interaction of the system, we lose accountability and hence give away responsibility again. Accountability cannot be only about the algorithms; the interaction, too, must provide an explanation and justification for the system to be accountable and consequently responsible.

Each of these values is more than just a difficult balancing act: they pose intricate challenges in their very definition. Consider the explainability of accountable AI, where we already see the surge of an entire field called XAI (Explainable Artificial Intelligence). Nonetheless, we cannot simply start explaining AI algorithms to everyone on the basis of their code. First, we need to come up with a feasible level of explanation. Do we make the code open-source and leave the explanation to the user? Do we provide security labels? Can we define quality standards for AI?

The latter has been suggested by the High-Level Expert Group on AI of the European AI Alliance. This group of 52 experts includes engineers, researchers, economists, lawyers and philosophers from academic, non-academic, corporate and non-corporate institutions. Its first draft of the Ethics Guidelines For Trustworthy AI proposes guiding principles as well as investment and policy strategies, and in particular advises on how to use AI to make an impact in Europe by leveraging Europe’s enablers of AI.

On the one hand, the challenges seem broad, and coming up with ethics guidelines that encompass all possible scenarios appears to be a daunting task. On the other hand, none of these questions is new to us. Aviation has a thorough and practicable set of guidelines, laws and regulations that allow us to trust systems which are already mostly autonomous, and yet we do not ask for an explanation of their autopilot software. We cannot simply transfer those rules to all autonomous applications, but we should recognize the importance of such guidelines and not dismiss the task.

General Artificial Intelligence in the sense of an above-human-intelligence system

In the previous discussion, we have seen that the problems that arise from the first level of AI systems do impact us today and that we are dealing with those problems one way or another. The discussion should be different if we talk about General Artificial Intelligence. Here, we assume that at some point the computing power of a machine not only exceeds the computing power of a human brain (which is already the case), but gives rise to an intelligence that exceeds human intelligence. It has been argued that reaching this point will trigger an unprecedented jump in technological growth, resulting in incalculable changes to human civilization: the so-called technological singularity.

In this scenario, we no longer deal with a tractable algorithm, as the super-intelligence will be capable of rewriting any sort of rule or guideline it deems to be trivial. There would be no way of preventing the system from breaching any security barrier that has been constructed using human intelligence. There are many scenarios which predict that such an intelligence will eventually get rid of humans or enslave mankind (see Bostrom’s Superintelligence or The Wachowskis’ Matrix Trilogy). But there is also a surge of serious research institutions that argue for alternative scenarios and study how we can align such an AI system with our values. We see that this second level of AI has much larger consequences, with questions that can only be addressed on the basis of theoretical assumptions rather than pragmatic guidelines or implementations.

An issue that arises from the conflation of the two layers is that people tend to mistrust a self-driving car because they attribute to the system some form of general intelligence that is not (yet) there. Currently, autonomous self-driving cars only avoid obstacles and are not even aware of the type of object (man, child, dog). Furthermore, all the apocalyptic scenarios contain the same sort of fallacy: they argue using human logic. We simply cannot conceive of a logic that would exceed our own cognition. Any sort of ethical principle, moral guideline or logical conclusion we want to attribute to the AI has been derived from thousands of years of human reasoning. A super-intelligent system might evolve this reasoning within a split second to a degree that would take us another thousand years to understand. Therefore, any picture we have of the future past the point of a super-intelligence is as speculative as religious imagination. Interestingly, this conflation of thoughts has led to the founding of “The Church of Artificial Intelligence”.

Responsibility at both levels

My responsibility as an AI researcher is to educate people about the technology that they are using and the technology that they will be facing. In the case of technology that is already in place, we have to disentangle it from the notion of Artificial Intelligence as an uncontrollable super-power that will overtake humanity. As pointed out, the responsibility for responsible AI is with the governments, institutions and programmers. Governments need to set guidelines and make sure that they are being followed; institutions and programmers need to follow them. At this stage, it is up to the people to create the rules that they want the AI to follow.

Artificial intelligence is happening and it will not stop merging with our society. It is probably the strongest change of civilization since the invention of the steam engine. On the one hand, the industrial revolution led to great progress and wealth for most of humankind. On the other hand, it led to great destruction of our environment, climate and planet. These were consequences that we either did not anticipate or were willing to accept, consequences which are leading us to the brink of our own extinction if no counter-action is taken. The same will be true for the advent of AI that we are currently witnessing. It can lead to great benefits, wealth and progress for most of the technological world, but we are responsible for ensuring that the consequences do not push us over the brink of extinction. Even though we might not be able to anticipate all the consequences, we as a society have the responsibility to act with caution and thoroughness. To conclude with the words of Spider-Man’s uncle: “with great power comes great responsibility”. And as AI might be the greatest and last power to be created by humans, it might be too great a responsibility, or it will be smart enough to be responsible for itself.

Layers of Responsibility for a better AI future was originally published in AI for People on Medium.